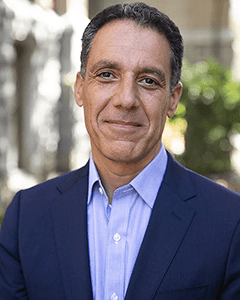

Anne Churchland

Professor of Neurobiology in the David Geffen School of Medicine at the University of California, Los Angeles (UCLA).

Anne Churchland received her B.A. in mathematics and psychology from Wellesley College. She received her Ph.D. in Neuroscience from the University of California, San Francisco (2003), working with Steve Lisberger on Representations of Eye and Image Velocity in Extrastriate Cortex. She transitioned to postdoctoral training at the University of Washington (2004 – 2010) working with Mike Shadlen on perceptual decisions in the Lateral Intraparietal area. She was a principal investigator in neuroscience at Cold Spring Harbor Laboratory from 2010 until she joined the UCLA faculty in May 2020.

The Churchland laboratory investigates the neural circuits that support decision-making. When making decisions, humans and animals can flexibly integrate visual signals with other sources of information before committing to action. The ability to flexibly use incoming information distinguishes decisions from reflexes, offering a tractable entry point into more complex cognitive processes defined by flexibility, such as abstract thinking, reasoning and problem-solving.

To understand the neural mechanisms that support decision-making, the Churchland lab measures and manipulates neurons in cortical and subcortical areas while animals make decisions about visual (and sometimes auditory or multisensory) signals. To connect the neural responses with behavior, her lab uses mathematical analyses aimed at understanding how information is represented at the level of neural populations, both at a given moment and over time. Recent work has demonstrated that in diverse cell types, sensory and movement signals co-modulate neural activity, even in trained experts. The timing of movement signals is informative about the animal’s internal state of engagement.

Churchland has received research awards from the McKnight Foundation, the Pew Charitable Trusts, the Merck Foundation and the Klingenstein-Simons Foundation. She received the James M and Cathleen D Stone award for significant research accomplishments (Cold Spring Harbor Laboratory, 2020) and the Janett Rosenburg Trubatch Career Development Award (Society for Neuroscience, 2012) for demonstrating originality and creativity in research. Her mentoring efforts have been recognized by the Louise Hanson Marshall Special Recognition Award for outstanding dedication to promoting the professional development of women (Society for Neuroscience, 2017) and the UCLA Excellence in Mentoring Award (2024). To broaden the impact of her lab’s efforts, Churchland maintains a YouTube channel and a TikTok stream featuring scientific content aimed at diverse audiences.

The intersection of vision and movements in the mammalian brain

Saturday, May 17, 2025, 7:30 – 8:30 pm, Talk Room 1-2

Human and animal movements are often viewed as a nuisance that “muddies the waters” of efforts to link visual inputs to cognitive processes like decision-making. Such movements are often prevented via hardware, “regressed out” in analysis or simply ignored. However, in naturalistic circumstances, animals and humans make frequent movements during perceptual and cognitive tasks. These include large movements that optimally position the sensor (e.g., the fovea), or smaller, high-frequency movements that add spectrotemporal content to a stimulus. Importantly, recent work has demonstrated that movements impact neural activity in early sensory areas, even in experts engaged in making visual decisions. In mice, cell-type specific measurements have demonstrated that movements modulate not only neurons that project corticocortically, but also neurons that project to subcortical targets, suggesting that cortical neurons broadcast movement signals throughout the brain. Further, movements modulate neural activity in both head-fixed and freely moving rodents, arguing that movements shape activity in multiple contexts. These observations raise major outstanding questions about the nature of the movement signals, and the extent to which they reflect altered sensory inputs, efference copies, or underlying latent states. Emerging work using a novel, visual accumulation of evidence task that recruits and requires primary and secondary visual cortices begins to shed light on these questions. Taken together, this growing body of observations about the intersection of vision and movements calls for a new framework that acknowledges the diverse ways in which dynamic interactions with the environment can benefit both sensory processing and decision-making.

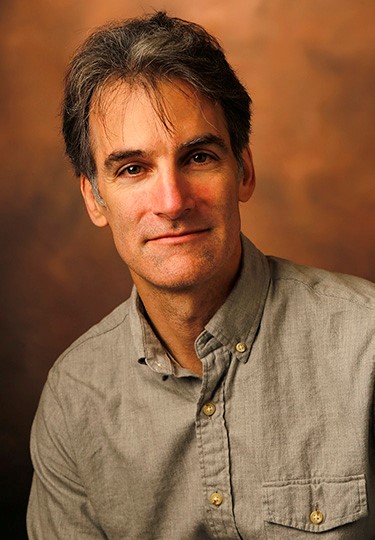

Suzana Herculano-Houzel

Suzana Herculano-Houzel William T. Freeman

William T. Freeman Kenneth C. Catania

Kenneth C. Catania Katherine J. Kuchenbecker

Katherine J. Kuchenbecker